Now Live for Early Access

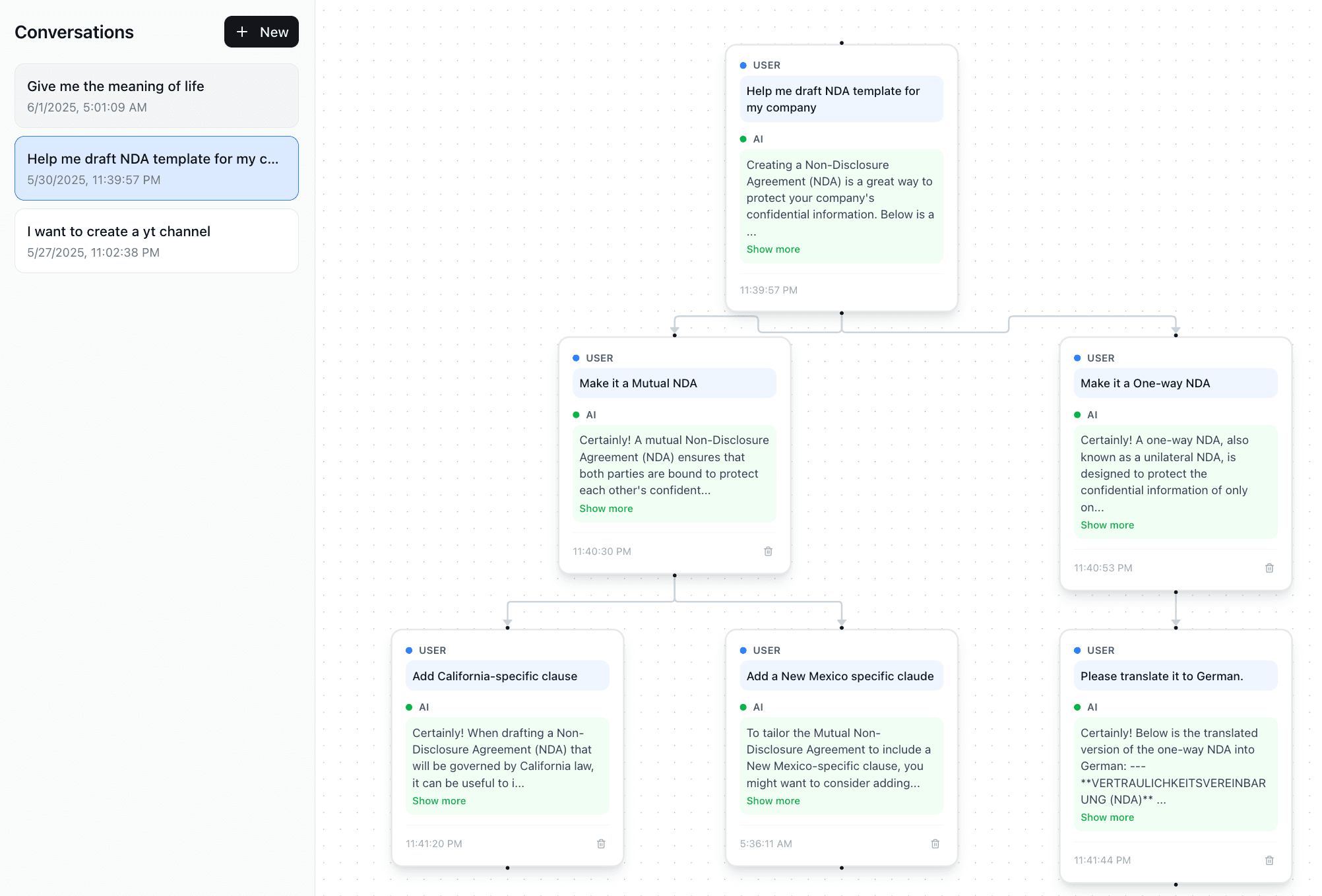

The Problem with Today's Chatbots

Modern AI conversations are broken. They cram everything into one endless scroll, which makes it hard to maintain context, control costs, or explore alternative conversation paths.

Branch Anywhere

Fork conversations at any point to explore tangents without cluttering the main thread.

Smarter Context

Sends the model only the most relevant conversation path instead of the full history, reducing token usage and improving response quality.
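To make branching and path-scoped context concrete, here is a minimal sketch that models a conversation as a tree of messages. It is an illustration, not the product's implementation: `ConversationTree`, `Node`, `add`, and `context` are hypothetical names, and "most relevant path" is assumed here to mean the chain from the root to the active message.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One message in the conversation tree (hypothetical structure)."""
    id: int
    role: str
    text: str
    parent: Optional[int] = None


class ConversationTree:
    """Illustrative sketch: a fork is simply a new child of any earlier message."""

    def __init__(self) -> None:
        self.nodes: dict[int, Node] = {}
        self._next_id = 0

    def add(self, role: str, text: str, parent: Optional[int] = None) -> int:
        """Append a message under `parent`; reusing an older parent creates a branch."""
        if parent is not None and parent not in self.nodes:
            raise KeyError(f"unknown parent id: {parent}")
        node_id = self._next_id
        self._next_id += 1
        self.nodes[node_id] = Node(node_id, role, text, parent)
        return node_id

    def context(self, leaf: int) -> list[Node]:
        """Return only the root-to-leaf path, skipping every sibling branch."""
        path: list[Node] = []
        current: Optional[int] = leaf
        while current is not None:
            node = self.nodes[current]
            path.append(node)
            current = node.parent
        path.reverse()
        return path


tree = ConversationTree()
root = tree.add("user", "Plan a trip to Japan")
reply = tree.add("assistant", "Sure. When are you going?", parent=root)
main = tree.add("user", "In April", parent=reply)
side = tree.add("user", "Do I need a visa?", parent=reply)  # fork from the same reply

# The side branch's context contains the shared history but not the main thread.
side_context = [node.text for node in tree.context(side)]
```

Because each branch carries only its own ancestors, a tangent never adds tokens to the main thread's prompt, and the main thread never adds tokens to the tangent's.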

Modular Memory

Auto-summarize and index older branches for efficient recall when needed.
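As a rough sketch of what summarizing and indexing an older branch could look like, the example below stores one summary per branch and builds a keyword index over it. Everything here is an assumption made for illustration: `BranchMemory`, `archive`, `recall`, and the truncating `truncate_summary` stand-in are hypothetical, and a real system would more likely use an LLM for summaries and embeddings for lookup.

```python
from collections import defaultdict
from typing import Callable


def truncate_summary(messages: list[str], max_words: int = 12) -> str:
    """Stand-in summarizer (assumption): keep the first few words of the branch."""
    words = " ".join(messages).split()
    return " ".join(words[:max_words])


class BranchMemory:
    """Illustrative store of branch summaries with a simple keyword index."""

    def __init__(self, summarize: Callable[[list[str]], str] = truncate_summary) -> None:
        self.summarize = summarize
        self.summaries: dict[str, str] = {}
        self.index: dict[str, set[str]] = defaultdict(set)

    def archive(self, branch_id: str, messages: list[str]) -> None:
        """Replace a branch's full text with a summary and index its words."""
        summary = self.summarize(messages)
        self.summaries[branch_id] = summary
        for word in summary.lower().split():
            self.index[word.strip(".,!?")].add(branch_id)

    def recall(self, query: str) -> list[str]:
        """Return summaries of archived branches sharing any word with the query."""
        hits: set[str] = set()
        for word in query.lower().split():
            hits |= self.index.get(word.strip(".,!?"), set())
        return [self.summaries[branch_id] for branch_id in sorted(hits)]


memory = BranchMemory()
memory.archive("visa-tangent", ["Do I need a visa for Japan?", "Many visitors do not."])
matches = memory.recall("visa rules")
```

The summarizer is passed in as a callable so the truncation stand-in can be swapped for a model call without changing how branches are archived or recalled.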

Simple Integration

Just two endpoints (POST /branch, GET /context) that work with any LLM provider.
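The sketch below shows how calls to the two endpoints might be constructed using only Python's standard library. Only the paths `POST /branch` and `GET /context` come from the description above; the base URL, the JSON field names (`conversation_id`, `from_message_id`), and the `branch_id` query parameter are placeholders assumed for illustration, so check the actual API documentation before relying on them.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://api.example.com"  # placeholder host, not the real service


def build_branch_request(conversation_id: str, from_message_id: str) -> Request:
    """Build a POST /branch request; the body field names are assumptions."""
    body = json.dumps(
        {"conversation_id": conversation_id, "from_message_id": from_message_id}
    ).encode("utf-8")
    return Request(
        f"{BASE_URL}/branch",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_context_request(branch_id: str) -> Request:
    """Build a GET /context request; the query parameter name is an assumption."""
    query = urlencode({"branch_id": branch_id})
    return Request(f"{BASE_URL}/context?{query}", method="GET")


branch_request = build_branch_request("conv-1", "msg-7")
context_request = build_context_request("branch-2")
# Passing either object to urllib.request.urlopen would send it.
```

The returned context would then be passed as the message history to whichever LLM provider you use, which is what keeps the integration provider-agnostic.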

Cost Efficient

Send only the active branch instead of the entire history, reducing token usage and API costs while improving conversation quality.

Developer Friendly

Clean API, comprehensive documentation, and SDKs for all major platforms.